Converting a PyTorch Classification Model to ONNX and Running ONNX Model Inference

(1) Converting a PyTorch classification model to ONNX


Test environment: PyTorch 1.4 + Ubuntu 16.04.5

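1. Saving and loading models in PyTorch

1.1 Three core functions are worth knowing when it comes to saving and loading models:

1. torch.save: saves a serialized object to disk. It uses Python's pickle utility for serialization and can save models, tensors, and dictionaries of all kinds of objects.

2. torch.load: uses pickle's unpickling facilities to deserialize a pickled object file into memory.

3. torch.nn.Module.load_state_dict: loads a model's parameter dictionary from a deserialized state_dict.

1.2 There are two ways to save and load a model. When saving a model for inference, only the learned parameters of the trained model need to be saved. A common PyTorch convention is to save models with a .pt or .pth file extension.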

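# Method 1: save and load the entire model

Save:

torch.save(model_object, 'model.pth')

Load:

model = torch.load('model.pth')
model.eval()

# Method 2: save and load only the model parameters (recommended)

Save:

torch.save(model.state_dict(), 'params.pth')

Load:

model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load('params.pth'))
model.eval()

# Remember to call model.eval() before running inference so that dropout and batch normalization layers are set to evaluation mode. Skipping this step produces inconsistent inference results.

# When saving a general checkpoint for inference or for resuming training, the model's state_dict must be saved.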
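A general checkpoint can be sketched as a dictionary that bundles the model's state_dict with the optimizer's state_dict. The snippet below is only an illustration under stated assumptions: the small `nn.Sequential` model, the key names (`epoch`, `model_state_dict`, `optimizer_state_dict`), and the in-memory buffer that stands in for a .pth file are all hypothetical choices made for the example.

```python
import io

import torch
import torch.nn as nn


def build_model():
    # Hypothetical stand-in for a real classifier (8 features -> 4 classes)
    return nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Dropout(0.5), nn.Linear(16, 4))


torch.manual_seed(0)
model = build_model()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# Bundle what is needed to resume training or to run inference
checkpoint = {
    "epoch": 5,
    "model_state_dict": model.state_dict(),
    "optimizer_state_dict": optimizer.state_dict(),
}

buffer = io.BytesIO()  # stands in for a file such as 'checkpoint.pth'
torch.save(checkpoint, buffer)
buffer.seek(0)

# Restore into freshly constructed objects
restored = torch.load(buffer, map_location="cpu")
new_model = build_model()
new_model.load_state_dict(restored["model_state_dict"])
new_optimizer = torch.optim.SGD(new_model.parameters(), lr=0.01, momentum=0.9)
new_optimizer.load_state_dict(restored["optimizer_state_dict"])

# Switch both models to evaluation mode so dropout is disabled before comparing
model.eval()
new_model.eval()
x = torch.randn(2, 8)
with torch.no_grad():
    outputs_match = torch.equal(model(x), new_model(x))
print(outputs_match)
```

Both models are put into evaluation mode before the comparison. With dropout still active, two forward passes over the same input would generally differ, which is the inconsistency the note about model.eval() warns about.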

2. Converting a PyTorch classification model to ONNX

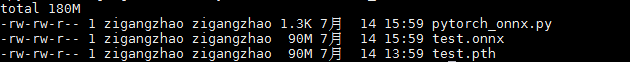

My model is a 4-class classifier trained from resnet50, and training ran on a GPU. The conversion process is as follows:

2.1 If the entire model was saved

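import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load the PyTorch model (the whole pickled model object) onto the selected device.
# On PyTorch 2.6 and later, loading a whole pickled model also needs weights_only=False.
model = torch.load("test.pth", map_location=device)

batch_size = 1  # batch size
input_shape = (3, 244, 384)  # input shape (C, H, W); change to your own

# Set the model to inference mode
model.eval()

x = torch.randn(batch_size, *input_shape)  # dummy input tensor used for tracing
x = x.to(device)

export_onnx_file = "test.onnx"  # output ONNX file name

torch.onnx.export(model,
                  x,  # dummy input
                  export_onnx_file,
                  opset_version=10,
                  do_constant_folding=True,  # whether to apply constant-folding optimization
                  input_names=["input"],  # input name
                  output_names=["output"],  # output name
                  dynamic_axes={"input": {0: "batch_size"},  # variable batch dimension
                                "output": {0: "batch_size"}})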

2.2 If only the model parameters were saved

import torch
import torchvision.models as models

# Load the saved parameters (state_dict); map_location keeps GPU-trained weights loadable on CPU
state_dict = torch.load("test.pth", map_location="cpu")

model = models.resnet50()
model.fc = torch.nn.Linear(2048, 4)  # 4-class head, matching the trained model
model.load_state_dict(state_dict)

batch_size = 1  # batch size
input_shape = (3, 244, 384)  # input shape (C, H, W); change to your own

# Set the model to inference mode
model.eval()

x = torch.randn(batch_size, *input_shape)  # dummy input tensor used for tracing

export_onnx_file = "test.onnx"  # output ONNX file name

torch.onnx.export(model,
                  x,  # dummy input
                  export_onnx_file,
                  opset_version=10,
                  do_constant_folding=True,  # whether to apply constant-folding optimization
                  input_names=["input"],  # input name
                  output_names=["output"],  # output name
                  dynamic_axes={"input": {0: "batch_size"},  # variable batch dimension
                                "output": {0: "batch_size"}})

Note: model conversion tools
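Two loading details in the conversion scripts are easy to trip over: parameters saved from a GPU run may need to be remapped to the CPU, and the classification head has to be resized before load_state_dict is called. The sketch below illustrates both with a deliberately tiny model. `TinyNet`, its layer sizes, and the in-memory buffer standing in for test.pth are hypothetical and only mirror the shape of the resnet50 case, where the default head has 1000 classes and the trained one has 4.

```python
import io

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for a backbone that ends in an `fc` classification layer."""

    def __init__(self, num_classes=1000):
        super().__init__()
        self.features = nn.Linear(8, 16)
        self.fc = nn.Linear(16, num_classes)

    def forward(self, x):
        return self.fc(torch.relu(self.features(x)))


# "Training" side: a 4-class model on whichever device is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
trained = TinyNet(num_classes=4).to(device)
buffer = io.BytesIO()  # stands in for 'test.pth'
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# Conversion side: remap every stored tensor to the CPU while loading
state_dict = torch.load(buffer, map_location=torch.device("cpu"))

# A model with the default 1000-class head does not match the saved shapes
try:
    TinyNet().load_state_dict(state_dict)
    load_failed = False
except RuntimeError:
    load_failed = True

# Replacing the head first, as the script does with model.fc, makes loading succeed
model = TinyNet()
model.fc = nn.Linear(16, 4)
model.load_state_dict(state_dict)
model.eval()

with torch.no_grad():
    y = model(torch.randn(1, 8))
print(load_failed, tuple(y.shape))
```

The dummy input passed to the exporter should live on the same device as the model. Here both stay on the CPU.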

(2) ONNX model inference

1. Introduction to ONNX:

2. Downloading and installing onnxruntime and onnx


Run directly on the command line:

pip install onnx

pip install onnxruntime

3. Running inference on an ONNX model:

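Before running the inference code, it can help to confirm that both packages are visible to the current Python interpreter. The following is a small sketch that uses only the standard library. The helper name `missing_packages` is made up for this example and is not part of onnx or onnxruntime.

```python
import importlib.util


def missing_packages(names):
    """Return the subset of `names` that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(["onnx", "onnxruntime"])
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("onnx and onnxruntime are both available")
```

The check only looks the packages up without importing them, so it is safe to run in an environment where the installation has not finished yet.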

3.1 Code (inference succeeded):

# -*- coding: utf-8 -*-
import os, sys
sys.path.append(os.getcwd())
import onnxruntime
import onnx
import cv2
import torch
import numpy as np
import torchvision.transforms as transforms


class ONNXModel():
    def __init__(self, onnx_path):
        """
        :param onnx_path: path to the ONNX model file
        """
        self.onnx_session = onnxruntime.InferenceSession(onnx_path)
        self.input_name = self.get_input_name(self.onnx_session)
        self.output_name = self.get_output_name(self.onnx_session)
        print("input_name:{}".format(self.input_name))
        print("output_name:{}".format(self.output_name))

    def get_output_name(self, onnx_session):
        """
        output_name = onnx_session.get_outputs()[0].name
        :param onnx_session:
        :return: list of all output names
        """
        output_name = []
        for node in onnx_session.get_outputs():
            output_name.append(node.name)
        return output_name

    def get_input_name(self, onnx_session):
        """
        input_name = onnx_session.get_inputs()[0].name
        :param onnx_session:
        :return: list of all input names
        """
        input_name = []
        for node in onnx_session.get_inputs():
            input_name.append(node.name)
        return input_name

    def get_input_feed(self, input_name, image_numpy):
        """
        input_feed={self.input_name: image_numpy}
        :param input_name:
        :param image_numpy:
        :return: dict mapping each input name to the input array
        """
        input_feed = {}
        for name in input_name:
            input_feed[name] = image_numpy
        return input_feed

    def forward(self, image_numpy):
        # image_numpy = image.transpose(2, 0, 1)
        # image_numpy = image_numpy[np.newaxis, :]
        # onnx_session.run([output_name], {input_name: x})
        # :param image_numpy:
        # :return:
        # The input data type must match the model. Any of the following three forms works
        # (self.input_name is a list, so the first two index into it):
        # scores, boxes = self.onnx_session.run(None, {self.input_name[0]: image_numpy})
        # scores, boxes = self.onnx_session.run(self.output_name, input_feed={self.input_name[0]: image_numpy})
        input_feed = self.get_input_feed(self.input_name, image_numpy)
        scores, boxes = self.onnx_session.run(self.output_name, input_feed=input_feed)
        return scores, boxes

def to_numpy(tensor):
    return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()


r_model_path = "/home/zigangzhao/DMS/mtcnn-pytorch/test0815/onnx_model/rnet.onnx"
o_model_path = "/home/zigangzhao/DMS/mtcnn-pytorch/test0815/onnx_model/onet.onnx"

img = cv2.imread("/home/zigangzhao/DMS/mtcnn-pytorch/data_set/train/24/positive/999.jpg")
img = cv2.resize(img, (24, 24), interpolation=cv2.INTER_CUBIC)

# scipy.misc.imread reads image data in RGB format
# cv2.imread reads image data in BGR format
# PIL.Image.open reads image data in RGB format
# Make sure the format matches the one used when the image was read for the .pth test

to_tensor = transforms.ToTensor()
img = to_tensor(img)
img = img.unsqueeze_(0)

# ------------------------------------------------------------------------------------
# Method 1:
rnet1 = ONNXModel(r_model_path)
out = rnet1.forward(to_numpy(img))
print(out)

# ------------------------------------------------------------------------------------
# Method 2:
rnet_session = onnxruntime.InferenceSession(r_model_path)
onet_session = onnxruntime.InferenceSession(o_model_path)

# compute ONNX Runtime output prediction
inputs = {onet_session.get_inputs()[0].name: to_numpy(img)}
outs = onet_session.run(None, inputs)
img_out_y = outs
print(img_out_y)
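ONNX Runtime takes plain NumPy arrays, so the preprocessing step can also be written without torchvision. The sketch below assumes an HWC uint8 image in BGR order, which is what cv2.imread returns, and produces a float32 NCHW batch scaled to [0, 1] the way ToTensor does. The function name `preprocess_bgr_image` and the synthetic test image are made up for the example. Whether the channels should be flipped to RGB depends on how the images were read during training and .pth testing.

```python
import numpy as np


def preprocess_bgr_image(img_bgr, to_rgb=True):
    """Convert an HWC uint8 image (as returned by cv2.imread) to a float32 NCHW batch."""
    img = img_bgr[:, :, ::-1] if to_rgb else img_bgr  # reverse channel order: BGR -> RGB
    img = img.astype(np.float32) / 255.0              # scale to [0, 1], as ToTensor does
    img = img.transpose(2, 0, 1)                      # HWC -> CHW
    img = img[np.newaxis, ...]                        # add the batch dimension -> NCHW
    return np.ascontiguousarray(img)


# Synthetic 24x24 image: blue channel (index 0 in BGR order) set to 255, others 0
fake_bgr = np.zeros((24, 24, 3), dtype=np.uint8)
fake_bgr[:, :, 0] = 255

batch = preprocess_bgr_image(fake_bgr)
print(batch.shape, batch.dtype)
```

After the channel flip the blue plane ends up at index 2 of the channel axis, which is a quick way to check that the color order matches what the model expects.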

3.2 Testing the official ResNet18 model:

'''
code by zzg 2021/04/19
ILSVRC2012_val_00002557.JPEG 289 --mongoose
'''
import os, sys
sys.path.append(os.getcwd())
import onnxruntime
import onnx
import cv2
import torch
import torchvision.models as models
import numpy as np
import torchvision.transforms as transforms
from PIL import Image


def to_numpy(tensor):
    return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()


def get_test_transform():
    return transforms.Compose([
        transforms.Resize([224, 224]),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ])


image = Image.open('./images/ILSVRC2012_val_00002557.JPEG').convert('RGB')  # 289
img = get_test_transform()(image)
img = img.unsqueeze_(0)  # -> NCHW, 1,3,224,224
print("input img mean {} and std {}".format(img.mean(), img.std()))

onnx_model_path = "resnet18.onnx"
pth_model_path = "resnet18.pth"

## pth test on the host GPU
resnet18 = models.resnet18()
net = resnet18
net.load_state_dict(torch.load(pth_model_path))
net.eval()
output = net(img)
print("pth weights", output.detach().cpu().numpy())
print("HOST GPU prediction", output.argmax(dim=1)[0].item())

## ONNX test
resnet_session = onnxruntime.InferenceSession(onnx_model_path)
# compute ONNX Runtime output prediction
inputs = {resnet_session.get_inputs()[0].name: to_numpy(img)}
outs = resnet_session.run(None, inputs)[0]
print("onnx weights", outs)
print("onnx prediction", outs.argmax(axis=1)[0])

Result: the pth and ONNX outputs (labeled "weights" in the printout) differ only slightly, so the conversion is essentially lossless (only part of the comparison is listed).
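To put a number on that small difference, the two output vectors can be compared with NumPy. The sketch below uses only the first four values of each output as listed in this section. The tolerances passed to np.allclose are illustrative assumptions, not thresholds taken from the original test.

```python
import numpy as np

# First four output values copied from the pth and ONNX listings in this section
pth_out = np.array([-2.75895238e+00, -1.94232500e+00, -3.74886751e+00, -2.76631522e+00])
onnx_out = np.array([-2.75895381e+00, -1.94232643e+00, -3.74886823e+00, -2.76631761e+00])

# Largest element-wise deviation between the two runtimes
max_abs_diff = np.max(np.abs(pth_out - onnx_out))
print("max abs diff:", max_abs_diff)

# rtol/atol are illustrative tolerances, not values taken from the article
close_enough = np.allclose(pth_out, onnx_out, rtol=1e-5, atol=1e-5)
print("within tolerance:", close_enough)
```

In a full comparison the same check would run on the complete `output` and `outs` arrays from the script, together with a check that both argmax predictions agree.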

pth weights

[[-2.75895238e+00 -1.94232500e+00 -3.74886751e+00 -2.76631522e+00

-5.56726170e+00 1.02979660e-01 -1.53173268e-01 -2.12685680e+00

-2.15380117e-01 1.05095935e+00 9.06286120e-01 -3.54150224e+00

-2.77359426e-01 -4.46945143e+00 -1.67047071e+00 -1.43689775e+00

-3.06501412e+00 8.95503283e-01 -2.21714807e+00 -4.27185059e+00

-5.65821266e+00 -4.25675440e+00 -2.08176541e+00 -1.31338656e+00

2.22651696e+00 -1.12091851e+00 3.60926807e-01 -4.03066665e-01

-1.20702481e+00 1.77329823e-01 3.78770304e+00 1.21787858e+00

1.10849619e+00 -3.99422097e+00 -4.83004618e+00 -2.55381560e+00

-4.68239114e-02 -2.86062264e+00 2.69656086e+00 2.28774786e+00

.............................................................]]

HOST GPU prediction 289

--------------------------------------------------------------------------

onnx weights

[[-2.75895381e+00 -1.94232643e+00 -3.74886823e+00 -2.76631761e+00

-5.56726360e+00 1.02978483e-01 -1.53175250e-01 -2.12685800e+00

-2.15381145e-01 1.05095983e+00 9.06285942e-01 -3.54150319e+00

-2.77360559e-01 -4.46945238e+00 -1.67047083e+00 -1.43689895e+00

-3.06501722e+00 8.95501733e-01 -2.21714854e+00 -4.27185202e+00

-5.65821457e+00 -4.25675631e+00 -2.08176613e+00 -1.31338596e+00