Hands-On with MMagic

Preface

These notes follow the tutorial by Zihao (同济子豪兄).

Zihao's MMagic tutorial on GitHub: MMagic_Tutorials/0614

https://github.com/TommyZihao/MMagic_Tutorials/tree/main/0614

Introduction to MMagic

OpenMMLab homepage: https://openmmlab.com

OpenXLab application center, online AIGC demos: https://beta.openxlab.org.cn/apps

MMagic homepage: https://github.com/open-mmlab/mmagic

Video walkthrough by Zihao (同济子豪兄): https://space.bilibili.com/1900783

- Supports tomesd acceleration for Stable Diffusion.

- Supports an inferencer for all inpainting, matting, and image restoration models.

- Supports animated drawings.

- Supports Style-Based Global Appearance Flow for Virtual Try-On.

- Fixes the inferencer being unusable after installing with pip install.

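Multimodal computing refers to techniques that jointly analyze and process several perceptual modalities, such as images, speech, and text. Multimodal AIGC combines artificial intelligence (AI) with multimodal computing, using machine learning and deep learning to understand and process data from these modalities.

Multimodal AIGC has a wide range of applications, including but not limited to:

- Multimodal sentiment analysis: using multimodal data (such as facial expressions, speech, and text) to analyze and recognize emotion, giving a fuller picture of a person's emotional state.

- Vision-language interaction: combining image understanding with natural language processing to interpret and describe image content, enabling rich interaction between vision and language.

- Multimodal machine translation: fusing information from images, text, and other modalities to improve the quality and accuracy of machine translation systems.

- Cross-modal retrieval: integrating data from several modalities to analyze how they relate and to search across them, for example retrieving matching text descriptions for an image.

- Multimodal recommender systems: combining several kinds of user signals (such as purchase history, browsing records, and voice commands) to provide more personalized and accurate recommendations.

By integrating information from different modalities, multimodal AIGC aims to support more complete, accurate, and richer analysis and decision-making. It has potential value in human-computer interaction, information understanding, sentiment analysis, machine translation, and related areas.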

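Installing MMagic from Source

# Download the MMagic source code
!git clone https://github.com/open-mmlab/mmagic.git
# Enter the repository so that `-e .` installs MMagic itself
%cd mmagic
!pip3 install -e .

# Check the MMagic installation
import mmagic
print('MMagic version', mmagic.__version__)
# MMagic version 1.0.2dev0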
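If the import check fails, it can help to see which packages are actually installed. The sketch below uses only the standard library; the helper name `installed_version` and the list of package names are assumptions chosen for illustration, not something the tutorial prescribes.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Package names assumed for a typical MMagic setup; adjust to your environment.
for name in ("mmagic", "mmengine", "mmcv", "torch"):
    version = installed_version(name)
    print(f"{name:10s} {version if version else 'NOT INSTALLED'}")
```

A `NOT INSTALLED` line points at the dependency to install before retrying the MMagic import.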

Colorizing Black-and-White Photos

!python demo/mmagic_inference_demo.py \
    --model-name inst_colorization \
    --img test_colorization.jpg \
    --result-out-dir out_colorization.png
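To colorize a whole folder rather than one file, the same command can be generated once per image. This is a minimal sketch: the flags are the ones used in the command above, while the helper name `colorization_commands`, the `_colorized.png` naming scheme, and the list of accepted suffixes are hypothetical choices.

```python
from pathlib import Path

# File types treated as images (an assumption; extend as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def colorization_commands(input_dir, output_dir):
    """Build one demo-script command (as an argv list) per image in input_dir."""
    commands = []
    for src in sorted(Path(input_dir).iterdir()):
        if src.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files
        dst = Path(output_dir) / f"{src.stem}_colorized.png"
        commands.append([
            "python", "demo/mmagic_inference_demo.py",
            "--model-name", "inst_colorization",
            "--img", str(src),
            "--result-out-dir", str(dst),
        ])
    return commands


# Example usage, run from the mmagic repository root:
# import subprocess
# for cmd in colorization_commands("old_photos", "colorized"):
#     subprocess.run(cmd, check=True)
```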

Text-to-Image: Stable Diffusion

https://github.com/open-mmlab/mmagic/tree/main/configs/stable_diffusion

from mmagic.apis import MMagicInferencer

sd_inferencer = MMagicInferencer(model_name='stable_diffusion')

# text_prompts = 'A panda is having dinner at KFC'
text_prompts = 'A Persian cat walking in the streets of New York'

sd_inferencer.infer(text=text_prompts, result_out_dir='output/sd_res.png')

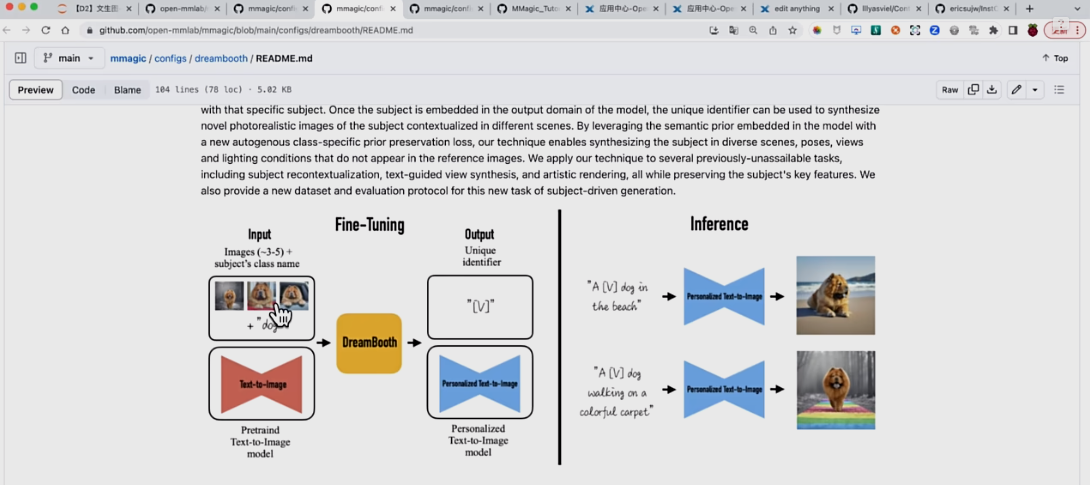
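To try several prompts in one run, the single `infer` call can be wrapped in a loop. The sketch below reuses the `infer(text=..., result_out_dir=...)` call shape from the example above; the helper name `generate_images` and the `sd_res_{idx}.png` file naming are assumptions made for illustration.

```python
from pathlib import Path


def generate_images(inferencer, prompts, out_dir="output"):
    """Run one text-to-image call per prompt and return the output paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for idx, prompt in enumerate(prompts):
        out_path = out_dir / f"sd_res_{idx}.png"
        # Same call shape as the single-prompt example.
        inferencer.infer(text=prompt, result_out_dir=str(out_path))
        paths.append(out_path)
    return paths


# Example usage with the inferencer created earlier:
# generate_images(sd_inferencer, [
#     'A panda is having dinner at KFC',
#     'A Persian cat walking in the streets of New York',
# ])
```

Passing the inferencer in as an argument keeps the model loaded once and reused for every prompt.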

Text-to-Image: DreamBooth

# Train with LoRA; the training parameters can be adjusted in dreambooth-lora.py
bash tools/dist_train.sh configs/dreambooth/dreambooth-lora.py 1

# The following commands are run inside a Python session
import torch
from mmengine import Config
from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

cfg = Config.fromfile('./mmagic/configs/dreambooth/dreambooth-lora.py')
dreambooth_lora = MODELS.build(cfg.model)
state = torch.load('mmagic/work_dirs/dreambooth-lora/iter_1000.pth')['state_dict']

def convert_state_dict(state):
    # Rename checkpoint keys so they match the freshly built model
    state_dict_new = {}
    for k, v in state.items():
        if '.module' in k:
            k_new = k.replace('.module', '')
        else:
            k_new = k
        if 'vae' in k:
            if 'to_q' in k:
                k_new = k.replace('to_q', 'query')
            elif 'to_k' in k:
                k_new = k.replace('to_k', 'key')
            elif 'to_v' in k:
                k_new = k.replace('to_v', 'value')
            elif 'to_out' in k:
                k_new = k.replace('to_out.0', 'proj_attn')
        state_dict_new[k_new] = v
    return state_dict_new

dreambooth_lora.load_state_dict(convert_state_dict(state))
dreambooth_lora = dreambooth_lora.cuda()

samples = dreambooth_lora.infer('side view of sks dog', guidance_scale=5)
samples['samples'][0]  # display the resulting image
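To see what the key conversion does without loading a real checkpoint, it can be run on a small hand-written dictionary. The function is repeated here so the snippet runs on its own; the keys in `toy_state` are made up for illustration and are not taken from an actual MMagic checkpoint.

```python
def convert_state_dict(state):
    """Same renaming rules as the function used when loading the checkpoint."""
    state_dict_new = {}
    for k, v in state.items():
        if '.module' in k:
            k_new = k.replace('.module', '')
        else:
            k_new = k
        if 'vae' in k:
            if 'to_q' in k:
                k_new = k.replace('to_q', 'query')
            elif 'to_k' in k:
                k_new = k.replace('to_k', 'key')
            elif 'to_v' in k:
                k_new = k.replace('to_v', 'value')
            elif 'to_out' in k:
                k_new = k.replace('to_out.0', 'proj_attn')
        state_dict_new[k_new] = v
    return state_dict_new


# Hypothetical keys; the integer values stand in for tensors.
toy_state = {
    'unet.module.conv_in.weight': 0,
    'vae.decoder.attn.to_q.weight': 1,
    'vae.decoder.attn.to_out.0.bias': 2,
    'text_encoder.embed.weight': 3,
}

for old, new in zip(toy_state, convert_state_dict(toy_state)):
    print(f'{old}  ->  {new}')
```

The `.module` segment is dropped, VAE attention keys are renamed, and everything else passes through unchanged. One thing worth checking against your own checkpoint: as written, the VAE branch starts again from the original key, so a VAE attention key that also contains `.module` would keep that segment.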

Image-to-Image: ControlNet-Pose

import cv2
import numpy as np
import mmcv
from mmengine import Config
from PIL import Image
from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

cfg = Config.fromfile('configs/controlnet/controlnet-pose.py')
# convert ControlNet's weight from SD-v1.5 to Counterfeit-v2.5
cfg.model.unet.from_pretrained = 'gsdf/Counterfeit-V2.5'
cfg.model.vae.from_pretrained = 'gsdf/Counterfeit-V2.5'
cfg.model.init_cfg['type'] = 'convert_from_unet'

controlnet = MODELS.build(cfg.model).cuda()
# call init_weights manually to convert weight
controlnet.init_weights()

prompt = 'masterpiece, best quality, sky, black hair, skirt, sailor collar, looking at viewer, short hair, building, bangs, neckerchief, long sleeves, cloudy sky, power lines, shirt, cityscape, pleated skirt, scenery, blunt bangs, city, night, black sailor collar, closed mouth'

control_url = 'https://user-images.githubusercontent.com/28132635/230380893-2eae68af-d610-4f7f-aa68-c2f22c2abf7e.png'
control_img = mmcv.imread(control_url)
control = Image.fromarray(control_img)
control.save('control.png')

output_dict = controlnet.infer(prompt, control=control, width=512, height=512, guidance_scale=7.5)

samples = output_dict['samples']
for idx, sample in enumerate(samples):
    sample.save(f'sample_{idx}.png')

controls = output_dict['controls']
for idx, control in enumerate(controls):
    control.save(f'control_{idx}.png')

Image-to-Image: ControlNet-Canny

import cv2
import numpy as np
import mmcv
from mmengine import Config
from PIL import Image
from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

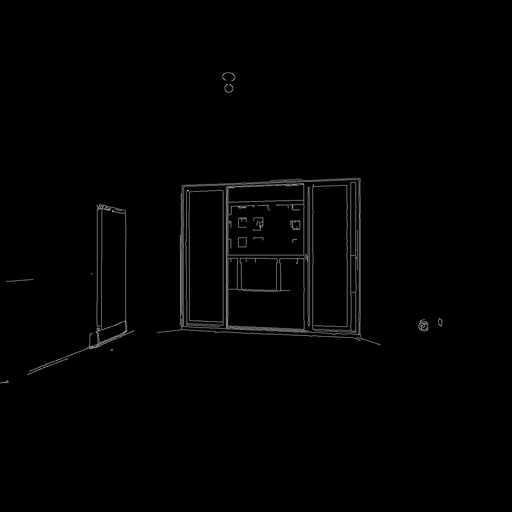

cfg = Config.fromfile('configs/controlnet/controlnet-canny.py')
controlnet = MODELS.build(cfg.model).cuda()

# Build the Canny edge map that serves as the control input
control_url = 'https://user-images.githubusercontent.com/28132635/230288866-99603172-04cb-47b3-8adb-d1aa532d1d2c.jpg'  # URL of the input image
control_img = mmcv.imread(control_url)
control = cv2.Canny(control_img, 100, 200)
control = control[:, :, None]
control = np.concatenate([control] * 3, axis=2)
control = Image.fromarray(control)

prompt = 'Room with blue walls and a yellow ceiling.'

output_dict = controlnet.infer(prompt, control=control)

samples = output_dict['samples']
for idx, sample in enumerate(samples):
    sample.save(f'sample_{idx}.png')

controls = output_dict['controls']
for idx, control in enumerate(controls):
    control.save(f'control_{idx}.png')

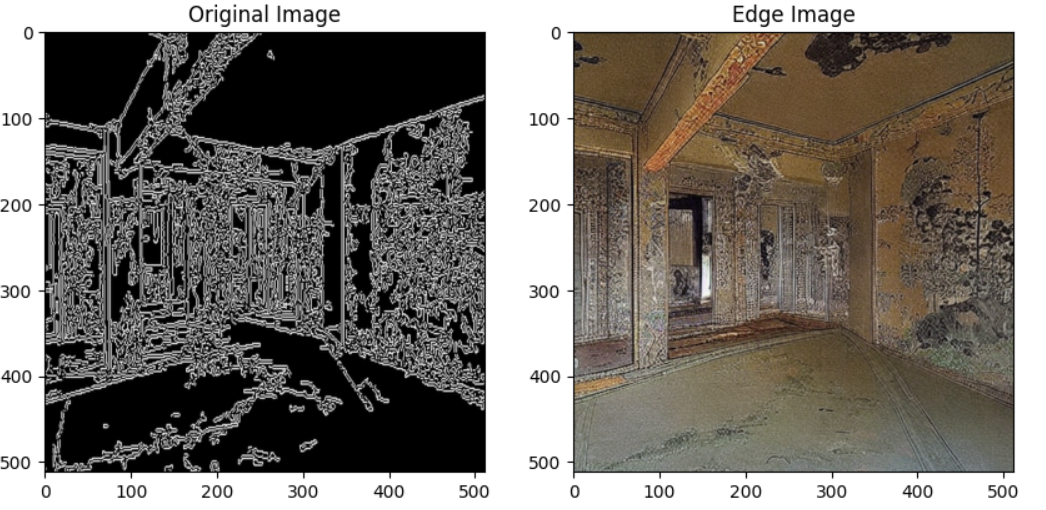
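The two array lines after the Canny call turn a single-channel edge map into a three-channel image. A small sketch with a synthetic array shows the shape at each step; the 4x6 array is a stand-in for the real Canny output, which has the same layout (2-D, `uint8`).

```python
import numpy as np

# Stand-in for the output of cv2.Canny: a single-channel uint8 edge map.
edges = np.zeros((4, 6), dtype=np.uint8)
edges[1, 2] = 255  # one "edge" pixel

edges_hw1 = edges[:, :, None]                        # (4, 6)    -> (4, 6, 1)
edges_rgb = np.concatenate([edges_hw1] * 3, axis=2)  # (4, 6, 1) -> (4, 6, 3)

print(edges.shape, edges_hw1.shape, edges_rgb.shape)
# (4, 6) (4, 6, 1) (4, 6, 3)
```

Every channel holds the same values, so the result is still a black-and-white edge image, only stored in the three-channel layout that `Image.fromarray` interprets as RGB.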

Showing the Results

import cv2
import matplotlib.pyplot as plt

# Read both images in color mode
# control_*.png is the control input; sample_*.png is the generated image
control_img = cv2.imread('/content/mmagic/control_0.png', cv2.IMREAD_COLOR)
sample_img = cv2.imread('/content/mmagic/sample_0.png', cv2.IMREAD_COLOR)

# OpenCV uses BGR channel order while matplotlib expects RGB, so convert
control_img = cv2.cvtColor(control_img, cv2.COLOR_BGR2RGB)
sample_img = cv2.cvtColor(sample_img, cv2.COLOR_BGR2RGB)

# Create subplots for a side-by-side comparison
fig, axs = plt.subplots(1, 2, figsize=(10, 5))

# Show the control image
axs[0].imshow(control_img)
axs[0].set_title('Control Image')

# Show the generated image
axs[1].imshow(sample_img)
axs[1].set_title('Generated Sample')

# Display the figure
plt.show()
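The BGR-to-RGB step matters because the same three numbers mean different colors in the two orders. For a three-channel image the conversion amounts to reversing the channel axis, which this sketch shows on a two-pixel array built by hand.

```python
import numpy as np

# A 1x2 image in BGR order, as cv2.imread would return it.
bgr = np.zeros((1, 2, 3), dtype=np.uint8)
bgr[0, 0] = (255, 0, 0)  # pure blue in BGR
bgr[0, 1] = (0, 0, 255)  # pure red in BGR

# Reversing the last axis swaps the B and R channels.
rgb = bgr[:, :, ::-1]

print(rgb[0, 0].tolist(), rgb[0, 1].tolist())
# [0, 0, 255] [255, 0, 0]
```

Without this step, matplotlib would read the blue pixel's `(255, 0, 0)` as red, so the displayed colors would come out swapped.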

Image-to-Image: ControlNet Animation

Option 1: Gradio from the command line

!python demo/gradio_controlnet_animation.py
# Click the printed URL to open the interactive Gradio page, upload a video, and run inference

Option 2: MMagic API

# Import the inferencer
from mmagic.apis import MMagicInferencer

# Create an MMagicInferencer instance
editor = MMagicInferencer(model_name='controlnet_animation')

# Specify the prompt and the negative prompt
prompt = 'a girl, black hair, T-shirt, smoking, best quality, extremely detailed'
negative_prompt = 'longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality'

# Input video
# https://user-images.githubusercontent.com/12782558/227418400-80ad9123-7f8e-4c1a-8e19-0892ebad2a4f.mp4
video = '../run_forrest_frames_rename_resized.mp4'
save_path = '../output_video.mp4'

# Run inference
editor.infer(video=video, prompt=prompt, image_width=512, image_height=512, negative_prompt=negative_prompt, save_path=save_path)

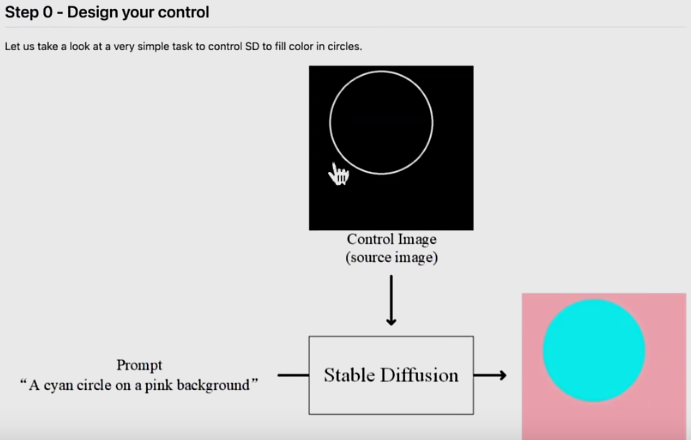

Training Your Own ControlNet

# Download the dataset