A Mathematical Prodigy from Jinan

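Wei Dongyi was born in 1991. Both of his parents are professors at Shandong Jianzhu University, and his father is a mathematics professor, so he took an interest in mathematics from an early age and showed exceptional talent. His favorite way to pass the time was poring over the family's math books, completely absorbed.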

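In his second year of junior high school, his outstanding talent earned him an early place on the math olympiad training team of the High School Attached to Shandong Normal University, where he trained alongside high school students. A year later he was admitted to that high school directly from junior high, without an entrance exam. In his first year of high school, he was selected for the national mathematical olympiad training team.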

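During high school he competed in the National High School Mathematics League and placed first. He also competed at the 49th and 50th International Mathematical Olympiads, held in Spain and Germany respectively, and earned a perfect score both times. He was then admitted to Peking University without having to sit the entrance exam.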

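At university, Wei again displayed remarkable talent. At the 2013 S.-T. Yau College Student Mathematics Contests he won the Hua Loo-Keng Prize in mathematics; in 2013 he also won the Shiing-Shen Chern gold medal, the C. C. Lin gold medal, and the Pao-Lu Hsu gold medal, as well as the individual all-around gold medal in the 2013 college student mathematics competition.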

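The IMO (International Mathematical Olympiad) has long been regarded as the most prestigious of the five major science olympiads. At the 49th IMO, Wei Dongyi's result was: all six problems solved correctly, a perfect score!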

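Keep in mind that he was competing against more than 500 of the world's top contestants, and only three of them achieved a perfect score. Wei Dongyi was one of those three: from his very first appearance, he stood at the top of the world.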

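"Compared with other students training for math olympiads, his love of mathematics bordered on obsession," recalled Zhang Yonghua, Wei's teacher at the High School Attached to Shandong Normal University.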

Turning Down the Chance to Study at Harvard

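In 2014 Wei Dongyi graduated from Peking University with outstanding results, and in 2018 he received his PhD from Peking University. From 2017 to 2019 he was a postdoctoral researcher at the Beijing International Center for Mathematical Research, and since December 2019 he has stayed on at the university as an assistant professor. In all, it took him only eight or nine years to go from undergraduate to PhD to a faculty position.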

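In 2018, at the first Doctoral Student Forum on Differential Equations, held at Fudan University, he was the only recipient of the doctoral dissertation award. Also in 2018, he won a gold medal in the Alibaba Global Mathematics Competition.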

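A story about Harvard trying to recruit him has circulated widely. Harvard supposedly offered a series of tempting concessions and was even willing to break its own rules: if Wei would come to study at Harvard, he could skip the English-language exam entirely.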

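Just when everyone assumed he would go abroad for further study, he chose to stay at Peking University. He completed his graduate studies there, earning his PhD in just three and a half years, and in 2019, after finishing his postdoctoral work, he became an assistant professor in PKU's mathematics department.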

Legends of the PKU Mathematics Department

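Peking University's School of Mathematical Sciences is known as China's "first department of the first university": PKU's national university code is "10001", ranking first among all Chinese universities, and within PKU the mathematics department's number is "01", ranking first among all of the university's programs.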

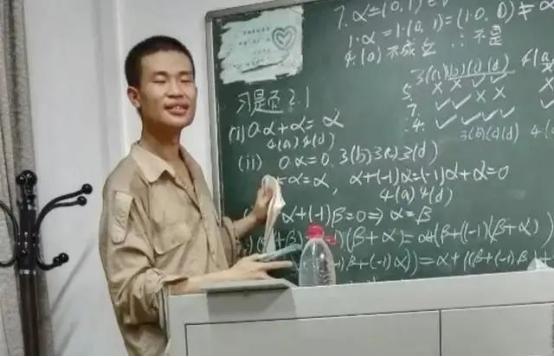

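The PKU mathematics department also goes by another name: "the first of PKU's four great madhouses." It is full of geniuses and eccentrics who turn every element of the world into mathematical symbols. One article introducing the school called Wei Dongyi the "grand master of the madhouse."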

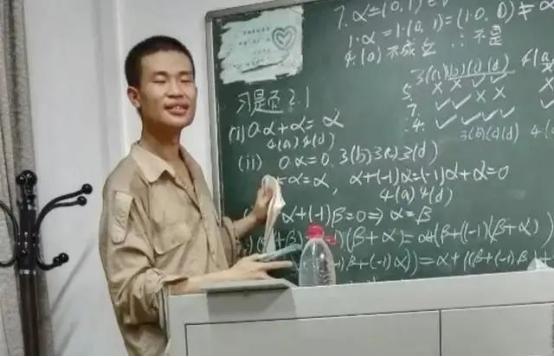

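Once, Wei Dongyi led a problem-solving session. When he finished, the students who had not followed asked the professor to explain it again, but the professor just smiled and said: "I can't. I didn't follow it either..."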

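In one course where Wei was the teaching assistant, the professor introduced things to the class like this: "If you can't solve an exercise, you can ask me. If I can't solve it, you can ask the TA. If even the TA can't solve it, the problem is probably wrong."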

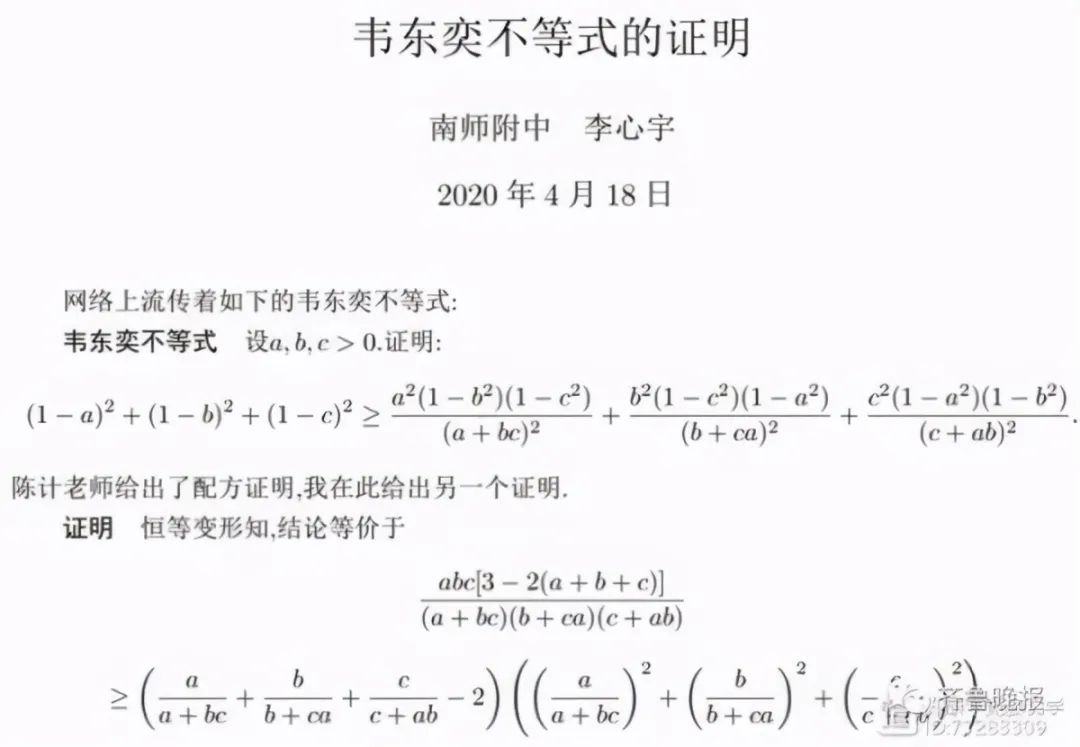

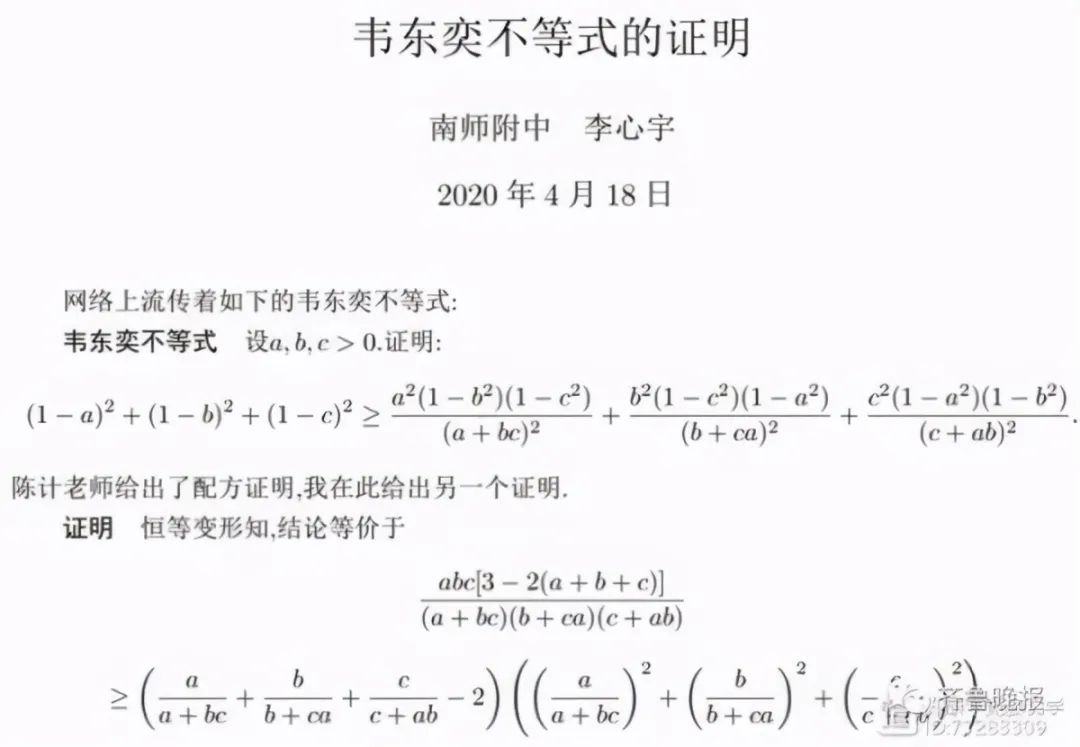

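Many of Wei's problem-solving methods are his own inventions and are far more concise than the standard solutions. They have been dubbed the "Wei method," and there is even a so-called "Wei Dongyi inequality." Reportedly, the inequality was a by-product of Wei "playing around" with Jacobi elliptic functions, when he was in his second year of high school.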

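When Wei was in secondary school, his mother said in an interview that everything, from his love of mathematics to the awards he has won today, came about naturally. He is actually a very ordinary child, she said, with many weak points in both study and daily life; for example, his Chinese and English are relatively weak.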

High School Teacher: Everyone Who Knows Him Thinks He Is a Genius

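On the afternoon of May 31, a reporter from Shandong Business Daily's Subao News interviewed Zhang Yonghua of the High School Attached to Shandong Normal University, the high school math teacher of "Wei Shen" ("God Wei"). In his high school teacher's eyes, "Wei Shen" is simply a genius.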

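Back when Wei was still in junior high at the Second Affiliated School of Shandong Normal University, his math teacher sought out Zhang Yonghua at the High School Attached to Shandong Normal University and told him excitedly, "I've got a real prospect here. Perfect scores in math."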

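Zhang, who had already heard about him, immediately prepared some problems for Wei. "One look and I could see he really was exceptional. His approach to solving problems was not at a junior or senior high school level; it went far beyond what I expected."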

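"Right at the start of his first year of high school there was a national league competition. The school secured an extra spot for him just to see how he would do, and to our surprise he won first prize straight away," Zhang said. He added that Wei excelled in mathematics, physics, and chemistry, while his English was somewhat weaker. Still, he got along well with his classmates and often explained problems to them.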

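Mathematics can be dry, but it is important. In Zhang's view, every major industry, including high-tech companies such as Huawei, needs people who do basic research, and mathematics is the foundation of all science.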

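Responding to online commenters who said Wei looked like a "fool," Zhang admitted that Wei's ability to look after himself in daily life is indeed somewhat lacking. He is very innocent, with a pure heart and mind, and his head is filled with nothing but mathematics. "People who don't know him certainly can't understand him, but anyone who understands his inner world will see that he is a genius. If he is allowed to settle down and focus on research, I'm certain that within five or six years he will astonish the world. I know him too well."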

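Original title: "Proud! PKU Math Department 'Great God' Is from Jinan! A Graduate of the High School Attached to Shandong Normal University! His High School Math Teacher: If He Settles Down to Research, He Will Astonish the World! I Know Him Too Well"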